Update on AI regulation in the UK

Katherine Evans | Senior Partner

The European Union has adopted comprehensive legislation, the EU Artificial Intelligence Act (the AI Act), which governs the use of AI within the EU. By contrast, the UK has adopted a cross-sector, outcome-based framework for regulating AI, underpinned by five core principles.

The UK Government’s position is that it wants to facilitate innovation, whilst retaining the flexibility to respond to risks and issues as they arise. Its preference is to strike a balance between innovation and safety by applying existing, technology-neutral regulatory frameworks to AI.

The five cross-sector principles for responsible AI are:

- Safety, security and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

The UK Government’s strategy for implementing these principles is predicated on three core pillars:

- Leveraging existing regulatory authorities and frameworks

- Establishing a central function to facilitate effective risk monitoring and regulatory coordination

- Supporting innovation by piloting a multi-agency advisory service – the AI and Digital Hub

The UK has no plans to introduce a new AI regulator to oversee the implementation of the framework. Instead, existing regulators, such as the Information Commissioner’s Office (ICO), the Office of Communications (Ofcom) and the Financial Conduct Authority (FCA), have been asked to implement the five principles as they supervise AI within their respective domains. Regulators are expected to take a proportionate, context-based approach, using existing laws and regulations.

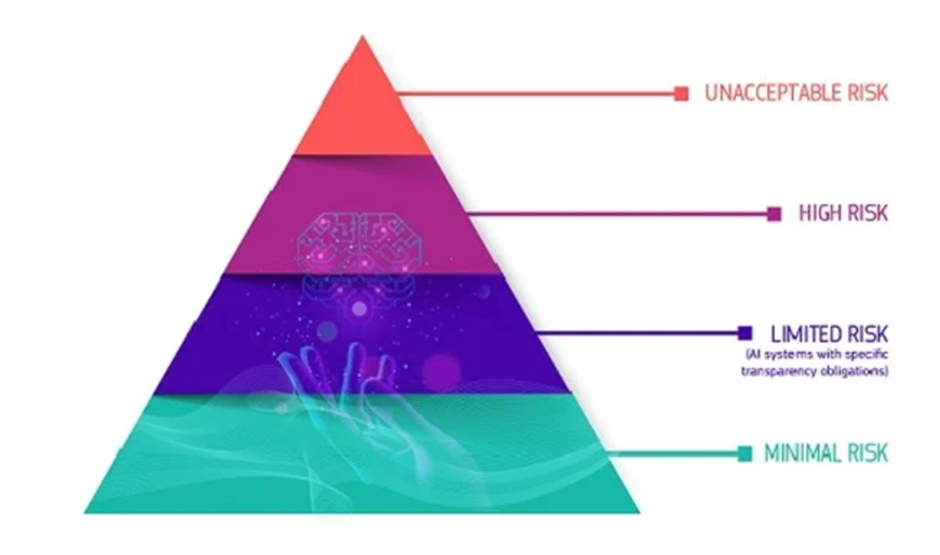

By contrast, the EU has taken a more cautious approach, applying a horizontal, risk-based framework that cuts across sectors.

Certain systems, such as those capable of behavioural manipulation and social scoring, are classified as posing an unacceptable risk and will therefore be banned. Systems that pose a high risk to health, safety or the fundamental rights protected under EU law will be monitored throughout their lifecycle.

The tiers are as follows:

AI practices that pose “unacceptable risk” are expressly prohibited under the Act. These prohibited practices include marketing, providing, or using AI-based systems that:

- Use subliminal, manipulative and/or deceptive techniques to influence a person to make a decision that they would not otherwise have made, in a way that causes or may cause that person or other persons significant harm.

- Exploit the vulnerabilities of a person or group due to their age, disability or specific social or economic situation in order to influence their behaviour in a way that causes or may cause that person or other persons significant harm.

- Use biometric data to categorise individuals in order to infer their race, political opinions, trade union membership, religious or philosophical beliefs, sex life or sexual orientation.

- Create or expand facial recognition databases through untargeted scraping of facial images from the internet or closed-circuit television (CCTV) footage.

AI practices that pose an unacceptable risk attract the highest penalties: companies may face fines of up to €35 million or 7 percent of their total worldwide annual turnover for the preceding financial year, whichever is higher.
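
To illustrate how the “whichever is higher” rule works in practice, the short sketch below computes the maximum fine for a given worldwide annual turnover. It is a simplified illustration only: the function and constant names are hypothetical, the result is a ceiling rather than the fine a regulator would actually impose, and it ignores the separate rules the Act applies to smaller businesses.

```python
# Hypothetical helper illustrating the "whichever is higher" ceiling for
# prohibited-practice breaches under the EU AI Act. Illustration only:
# it returns the maximum possible fine, not the fine a regulator would impose.

FIXED_CAP_EUR = 35_000_000  # €35 million
TURNOVER_PERCENT = 7        # 7% of total worldwide annual turnover


def max_prohibited_practice_fine(worldwide_turnover_eur: int) -> float:
    """Return the higher of €35m and 7% of worldwide annual turnover."""
    if worldwide_turnover_eur < 0:
        raise ValueError("turnover cannot be negative")
    turnover_based_cap = worldwide_turnover_eur * TURNOVER_PERCENT / 100
    return max(FIXED_CAP_EUR, turnover_based_cap)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        cap = max_prohibited_practice_fine(turnover)
        print(f"Turnover €{turnover:,} -> maximum fine €{cap:,.0f}")
```

For a company with €1 billion of worldwide turnover, 7 percent (€70 million) exceeds €35 million, so the turnover-based figure sets the ceiling; at or below €500 million of turnover, the €35 million figure applies.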

The “high risk” systems category is much more expansive than the “unacceptable risk” category and is likely to encompass many AI applications already in use today. For example, high-risk applications of AI technology may include biometric identification systems, systems used to evaluate people in education and vocational training, recruitment and employment evaluation systems, and systems used for creditworthiness assessments or insurance pricing.

The precise boundaries for high-risk AI technologies, however, are not yet certain. The Act clarifies that systems that “do not pose a significant risk of harm to the health, safety or fundamental rights of natural persons” generally will not be considered high-risk.

At a minimum, developers and implementers (referred to in the Act as “providers” and “deployers”) whose technology falls within the high-risk category should be prepared to comply with the following requirements of the AI Act:

- Register with the centralised EU database

- Have a compliant quality management system in place

- Maintain adequate documentation and logs

- Undergo relevant conformity assessments

- Comply with restrictions on the use of high-risk AI

- Continue to ensure regulatory compliance and be prepared to demonstrate such compliance upon request

The EU AI Act also imposes transparency obligations on the use of AI and sets out certain restrictions on the use of general-purpose AI models. For example, the Act requires that AI systems intended to interact directly with people be designed so that those people are informed they are interacting with an AI system, unless this is obvious from the circumstances.

In addition, general-purpose AI models with “high-impact capabilities” may be subject to further restrictions. A model is presumed to have such capabilities where the cumulative amount of compute used for its training, measured in floating-point operations (FLOPs), is greater than 10²⁵.

Among other requirements, providers of such models must maintain technical documentation of the model and its training and evaluation results (to be provided to the AI Office on request), put in place a policy to comply with EU copyright law, and make publicly available a sufficiently detailed summary of the content used for training.
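
The 10²⁵ threshold refers to the total number of floating-point operations used in training, not a rate per second. The sketch below shows how a provider might make a first, rough check against it. It relies on a widely cited rule of thumb that training a dense transformer model takes roughly 6 × N × D operations (N parameters, D training tokens); this heuristic is an assumption and is not set out in the Act, and the function names and example model sizes are hypothetical.

```python
# Illustrative check against the EU AI Act's 10^25 FLOP presumption threshold
# for general-purpose AI models with high-impact capabilities.
# ASSUMPTION: training compute is estimated with the rule of thumb
# C ≈ 6 × N × D (N = parameters, D = training tokens) for dense transformer
# models. The Act itself does not prescribe this formula.

THRESHOLD_FLOP = 10**25


def estimated_training_flop(parameters: int, training_tokens: int) -> int:
    """Rough estimate of total training compute in floating-point operations."""
    return 6 * parameters * training_tokens


def exceeds_threshold(total_flop: int) -> bool:
    """True if cumulative training compute is strictly greater than 10^25 FLOP."""
    return total_flop > THRESHOLD_FLOP


if __name__ == "__main__":
    # Hypothetical models; figures are invented for illustration only.
    examples = {
        "7B parameters, 2T tokens": (7 * 10**9, 2 * 10**12),
        "400B parameters, 15T tokens": (400 * 10**9, 15 * 10**12),
    }
    for label, (n, d) in examples.items():
        flop = estimated_training_flop(n, d)
        print(f"{label}: ~{flop:.2e} FLOP, above threshold: {exceeds_threshold(flop)}")
```

Under this approximation, the smaller hypothetical model (about 8.4 × 10²² FLOPs) falls well below the threshold, while the larger one (about 3.6 × 10²⁵ FLOPs) exceeds it. Any real assessment would rest on the compute actually recorded during training rather than an estimate of this kind.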

For further advice on AI in the UK and the EU, please contact Katherine Evans at katherine@mirkwoodevansvincent.com